SLMs vs. LLMs: Finding the Perfect Fit for Your Business Challenges

- Victor Aynbinder

- Technology, AI, Tools & Tips

- 18 Dec, 2024

Artificial intelligence is advancing rapidly, and small language models (SLMs) are becoming a key focus. While large language models (LLMs) have been widely discussed, SLMs are quietly emerging as a versatile and practical alternative for many real-world applications.

Small language models (SLMs, typically with fewer than 10 billion parameters) are compact AI models designed to process and understand language efficiently, using significantly fewer computational resources than large language models (LLMs, with tens to hundreds of billions of parameters). They are cost-effective, fast, and can be tailored to specific tasks, making them a strong fit for businesses seeking privacy-friendly, scalable, and efficient AI solutions.

The Case for Small Language Models

At EduLabs, we believe SLMs are gaining traction because they address key challenges associated with large-scale AI. These models prioritize efficiency, adaptability, and accessibility, making AI more practical for businesses and users alike.

1. Cost-Effectiveness:

Unlike LLMs, which require significant computational resources, SLMs can function efficiently on standard CPUs and cloud-based systems. This makes them an affordable choice for startups and enterprises alike. These models are designed to provide robust AI capabilities on commodity hardware without the need for expensive GPUs or specialized infrastructure. Organizations worldwide are using SLMs to develop cost-effective solutions for industries such as healthcare, education, and local businesses.
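
A rough way to see why smaller models fit on commodity hardware is to estimate the memory their weights occupy: parameter count times bytes per parameter. The sketch below is a back-of-the-envelope calculation under stated assumptions (weights only, decimal gigabytes). It ignores the KV cache, activations, and quantization metadata, so real memory use will be somewhat higher.

```python
# Back-of-the-envelope estimate of the memory taken by model weights.
# Assumption: weights only; KV cache, activations, and quantization
# scale/zero-point overhead are ignored, so real usage is higher.

def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    # (params_billions * 1e9 params) * (bits / 8 bytes) / (1e9 bytes per GB)
    return params_billions * bits_per_param / 8

MODELS = {"SmolLM2-1.7B": 1.7, "Llama 3.1 8B": 8.0, "Llama 3.3 70B": 70.0}

for name, params in MODELS.items():
    for bits in (16, 4):  # 16-bit (fp16/bf16) vs. 4-bit quantized weights
        print(f"{name:>14} at {bits:>2}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

On these assumptions an 8B model needs roughly 16 GB of memory for its weights at 16-bit precision and about 4 GB at 4-bit, while a 70B model needs around 140 GB at 16-bit.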

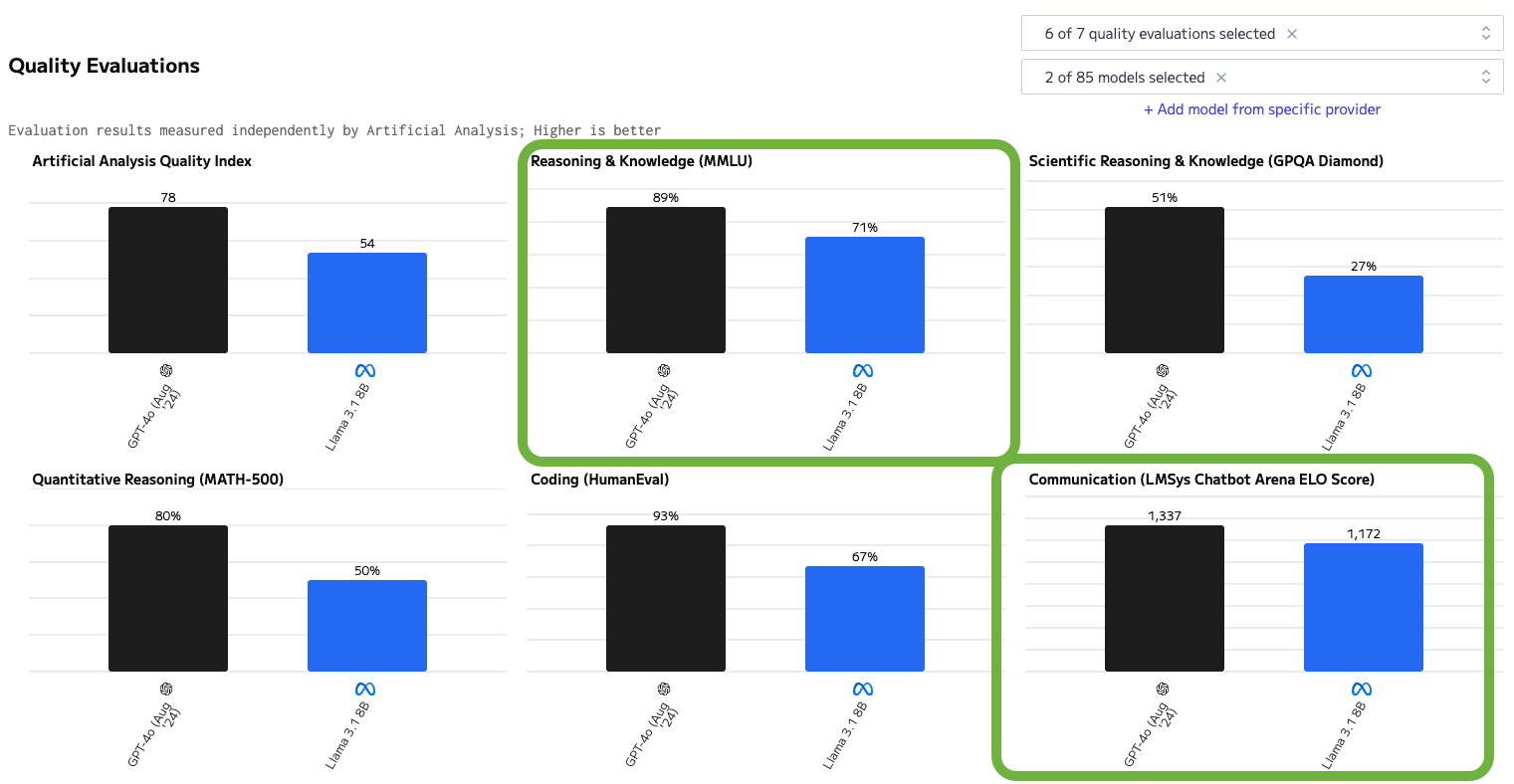

For instance, the Llama 3.1 8B model performs comparably to GPT-4o on text summarization tasks but is about 29 times cheaper on average ($2.90 vs. $0.10 per million tokens, assuming a 3:1 input-to-output token ratio) and runs more than twice as fast.
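
A 3:1 blended price is typically computed as a weighted average of the input and output prices, counting input tokens three times as heavily. The source quotes only the blended figures, so the sketch below writes out that definition (with $p_{\text{in}}$ and $p_{\text{out}}$ as per-million-token prices) and then checks the quoted ratio:

```latex
p_{\text{blended}} = \frac{3\,p_{\text{in}} + p_{\text{out}}}{4},
\qquad
\frac{p^{\text{GPT-4o}}_{\text{blended}}}{p^{\text{Llama 3.1 8B}}_{\text{blended}}}
= \frac{\$2.90}{\$0.10} = 29
```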

Summarizing a five-email thread of 3,040 tokens with GPT-4o costs $0.00865 per request. For a company with 300 employees each processing 20 emails a day, that adds up to approximately $1,141 per month. Llama 3.1 8B handles the same workload for about $39 per month and, when self-hosted, keeps data private by operating entirely within the organization's infrastructure.
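
The monthly figures follow from simple multiplication. The sketch below reproduces them from the quoted per-request cost. The number of working days per month is not stated in the source; 22 business days is our assumption, chosen because it reproduces the ~$1,141 figure. The Llama figure is derived by dividing by the ~29x blended-price ratio quoted above, not from a separate per-request quote.

```python
# Figures quoted in the text, plus one labeled assumption (WORKING_DAYS).
COST_PER_REQUEST_GPT4O = 0.00865  # USD per 3,040-token five-email summary
PRICE_RATIO = 2.9 / 0.1           # GPT-4o vs. Llama 3.1 8B blended price (~29x)
EMPLOYEES = 300
EMAILS_PER_DAY = 20               # summarization requests per employee per day
WORKING_DAYS = 22                 # assumption: business days per month

def monthly_cost(cost_per_request: float, employees: int,
                 requests_per_day: int, days: int) -> float:
    """Total monthly spend in USD for a fixed per-request cost."""
    return cost_per_request * employees * requests_per_day * days

gpt4o_monthly = monthly_cost(COST_PER_REQUEST_GPT4O, EMPLOYEES,
                             EMAILS_PER_DAY, WORKING_DAYS)
# Same token volume at a ~29x lower price per token.
llama_monthly = gpt4o_monthly / PRICE_RATIO

print(f"GPT-4o:       ${gpt4o_monthly:,.0f} per month")
print(f"Llama 3.1 8B: ${llama_monthly:,.0f} per month")
```

With these inputs the script prints about $1,142 for GPT-4o and $39 for Llama 3.1 8B, in line with the figures above.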

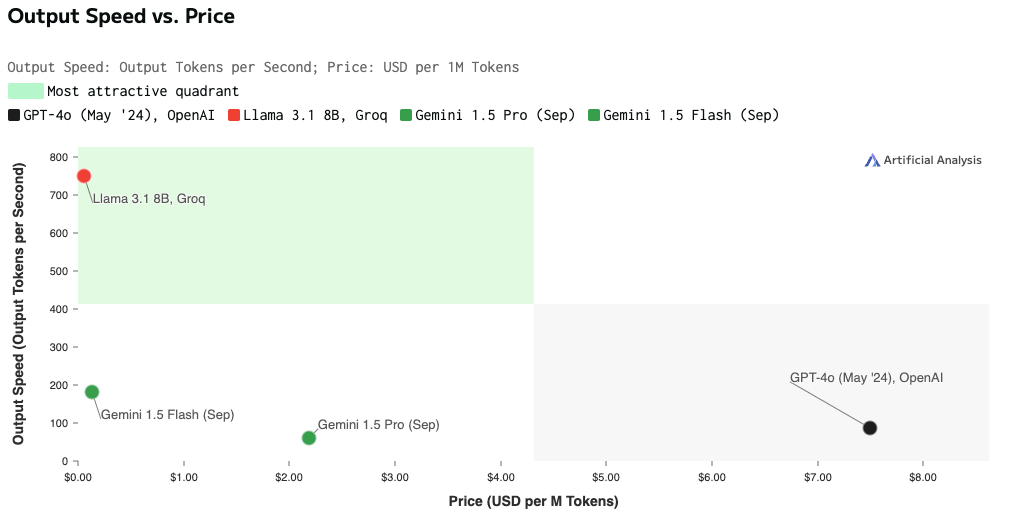

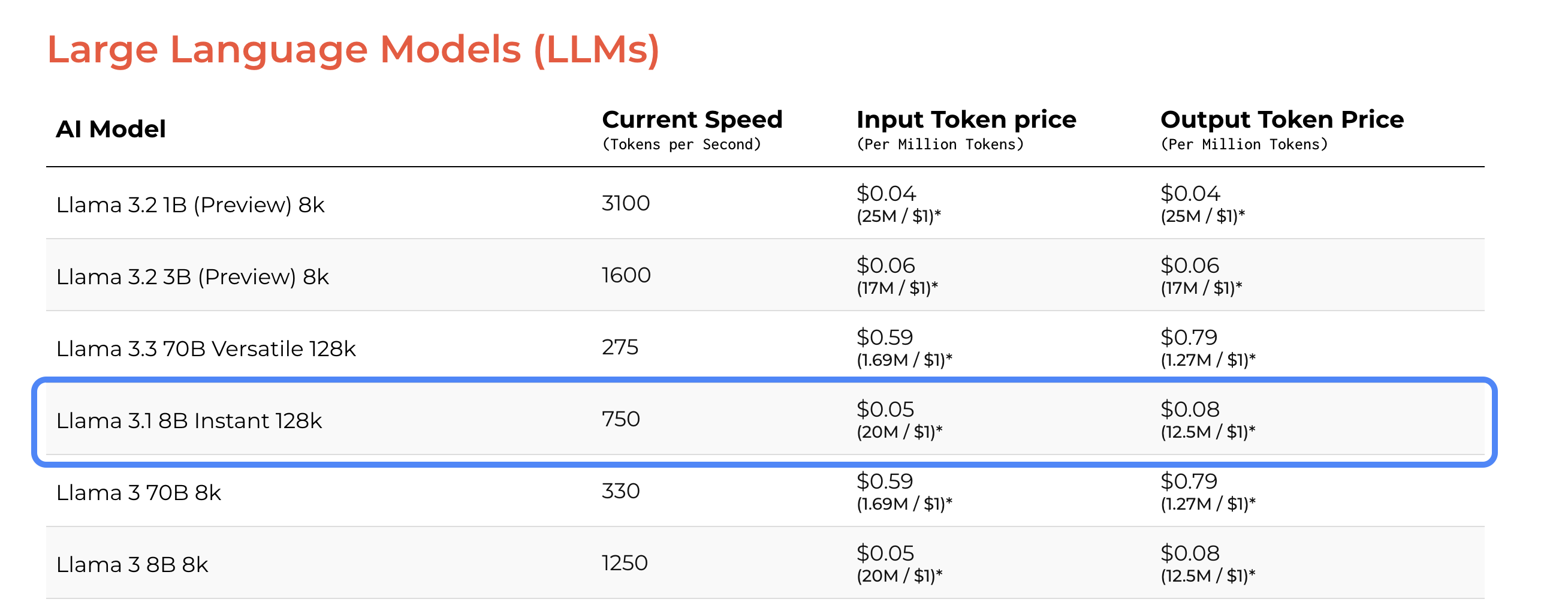

Average prices are a useful benchmark, but Groq goes a step further with even lower pricing and higher speed. Groq's Llama 3.1 8B delivers about 750 tokens per second at just $0.06 per million tokens (with the same 3:1 input-to-output ratio), making it roughly 48 times cheaper than OpenAI's GPT-4o.

If your organization handles many tasks that small language models excel at, such as summarization, extraction, and data clustering, consider this example: running 1,000 small AI tasks per day for each of 300 employees would cost the company about $57,000 per month with GPT-4o. With Groq's Llama 3.1 8B, the monthly cost drops to less than $1,200.
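
The same arithmetic scales to this higher-volume scenario. In the sketch below, each task is assumed to cost about as much as the 3,040-token email summary ($0.00865 with GPT-4o), and a month is again assumed to have 22 working days. Neither assumption is stated in the source; both are chosen because they reproduce the quoted totals.

```python
# Assumptions (not stated in the source): each task is similar in size to the
# 3,040-token email example, and a month has 22 working days.
COST_PER_TASK_GPT4O = 0.00865    # USD per task with GPT-4o
GPT4O_BLENDED = 2.90             # USD per 1M tokens, 3:1 input:output
GROQ_LLAMA_8B_BLENDED = 0.06     # USD per 1M tokens, 3:1 input:output
EMPLOYEES = 300
TASKS_PER_EMPLOYEE_PER_DAY = 1_000
WORKING_DAYS = 22

price_ratio = GPT4O_BLENDED / GROQ_LLAMA_8B_BLENDED  # ~48x
tasks_per_month = EMPLOYEES * TASKS_PER_EMPLOYEE_PER_DAY * WORKING_DAYS
gpt4o_monthly = COST_PER_TASK_GPT4O * tasks_per_month
# Same token volume, billed at a ~48x lower blended price.
groq_monthly = gpt4o_monthly / price_ratio

print(f"Tasks per month: {tasks_per_month:,}")
print(f"GPT-4o:          ${gpt4o_monthly:,.0f}")
print(f"Groq Llama 8B:   ${groq_monthly:,.0f}")
print(f"Price ratio:     {price_ratio:.1f}x")
```

This prints roughly $57,090 for GPT-4o and about $1,181 for Groq's Llama 3.1 8B, consistent with the "about $57,000" and "less than $1,200" quoted above.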

This example highlights the cost and efficiency benefits of smaller, domain-specific models like Llama. As businesses rely more on AI for everyday tasks such as summarizing emails, the annual cost of using AI for just 10 similar tasks can quickly exceed $100,000. Selecting the right tool is crucial to keep expenses in check and protect data privacy. With AI adoption still in its early stages, these considerations will only grow in importance as organizations implement more AI-driven processes.
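
The $100,000 figure is easy to check if each of the 10 tasks is assumed to cost about as much as the email-summary example with GPT-4o (roughly $1,141 per month); that per-task cost is our assumption:

```latex
10 \text{ tasks} \times \$1{,}141 \text{ per task per month} \times 12 \text{ months}
= \$136{,}920 > \$100{,}000
```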

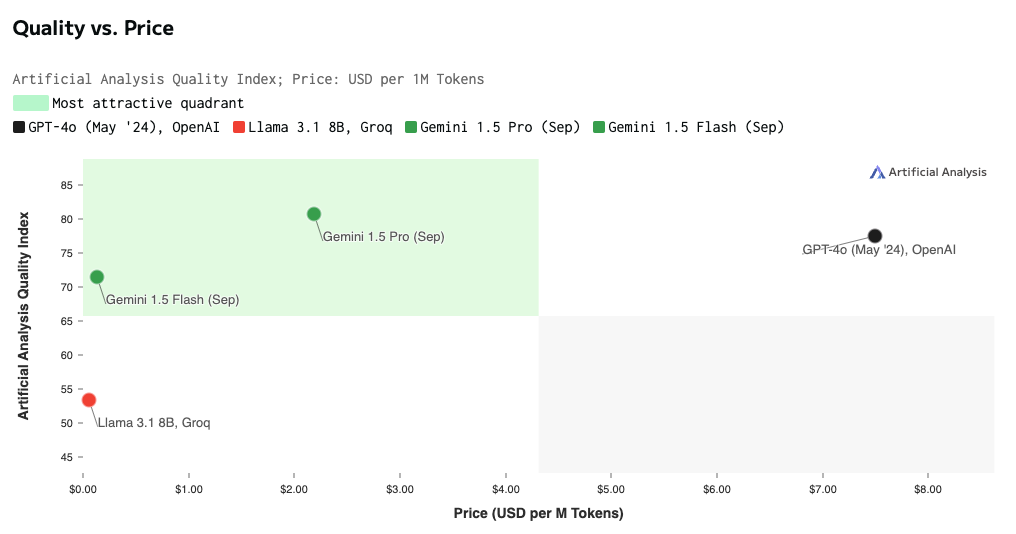

Google, meanwhile, is competing on price with its cutting-edge Gemini models and has recently cut costs significantly: Gemini 1.5 Pro is now priced at approximately $1.50 per 1M tokens, about half the cost of GPT-4o. Gemini 1.5 Flash is up to 10 times cheaper than the Pro version, yet it performs well on tasks like summarization and text extraction, making it a cost-effective choice for specific use cases.

2. Improved Latency:

SLMs deliver faster response times, which is critical for real-time applications such as customer service chatbots, voice assistants, and dynamic content generation. Recent small models such as SmolLM2-1.7B-Instruct are compact enough to run efficiently on edge devices, where they can return responses with minimal delay. For instance, a logistics company could use such a model to provide up-to-the-minute shipment updates, improving customer satisfaction by answering inquiries quickly and accurately.

3. Accessibility and Adaptability:

At EduLabs, we understand that SLMs, with their smaller computational footprints, are highly versatile and can be seamlessly deployed on devices such as smartphones, IoT systems, and edge computing platforms. These models are uniquely adaptable, making them ideal for tailoring solutions to specific use cases and datasets. For instance, a retail business might employ a fine-tuned SLM to study regional shopping patterns and offer personalized product recommendations, enriching the customer experience. Similarly, healthcare organizations can leverage SLMs to develop compact diagnostic tools that work efficiently in low-bandwidth rural areas, ensuring essential services are accessible to underserved populations.

4. Security Benefits:

SLMs give organizations the option to run them within their private data centers, enhancing data privacy and providing greater control over sensitive information. Financial institutions, for example, can use SLMs to process customer data without relying on external servers. Open-source small language models are often favored by organizations that prioritize regulatory compliance and data sovereignty, offering a safe way to deploy AI while retaining full control of proprietary information.

The Key Advantages of Using Small Language Models

At EduLabs, we believe small language models (SLMs) are a game-changer for businesses aiming to achieve better outcomes. Built on the same foundations as large language models (LLMs), SLMs have far fewer parameters and are often fine-tuned on focused data for specific use cases. This focus can help them deliver accurate answers, reduce errors, and run more efficiently. Compared to LLMs, SLMs are faster, more cost-effective, and more environmentally sustainable, since they require significantly less energy to operate.

SLMs don’t require massive clusters of AI-processing hardware. They can run on-premises and, in some cases, even on a single device. This eliminates the need for cloud processing, giving businesses more control over their data while ensuring compliance with privacy regulations. For instance, a healthcare provider could use an SLM to analyze medical records locally, enhancing both privacy and speed. Similarly, a legal firm might use an SLM to review sensitive documents without relying on external servers, minimizing risks.

SLM Use Cases: Transforming Businesses Across Sectors

Small Language Models (SLMs) are making a significant impact across industries by addressing specific business needs with precision and efficiency. Here’s how they’re driving transformation:

Customer Service:

SLMs can be used for rapid customer sentiment and complaint analysis, ensuring data stays within the corporate firewall. They generate valuable summaries that integrate into customer relationship management (CRM) products to improve resolution actions. For example, a global e-commerce company can use SLMs to automatically classify and prioritize customer inquiries, improving response times and customer satisfaction.

Healthcare:

SLMs can be deployed to generate personalized health recommendations or educational materials tailored to a patient’s medical history, treatment plan, and preferences. For instance, an SLM-powered application could provide patients with dietary advice, medication reminders, or exercise plans based on their specific conditions, all while ensuring sensitive data stays within secure systems. This approach not only enhances patient care but also encourages better adherence to treatment plans, ultimately improving health outcomes.

Finance:

SLMs can analyze patterns in transaction data to identify unusual or suspicious activities, flagging potential fraud in real-time. These models operate directly within a bank’s secure systems, ensuring sensitive financial data remains protected. For example, an SLM could monitor transaction logs to detect anomalies such as irregular spending patterns or multiple failed login attempts, enabling financial institutions to respond promptly and mitigate risks effectively.

Retail:

In retail, SLMs could also help optimize inventory management and restocking. By analyzing sales patterns, seasonal trends, and local demand, SLMs can help predict stock requirements. For instance, a retailer could use an SLM to forecast which products will sell quickly in a particular region, ensuring timely restocking and reducing excess inventory. This approach helps streamline operations, minimize waste, and improve customer satisfaction by keeping popular items available.

Making the Right AI Choices: A Guide for Executives

At EduLabs, we recognize that business adoption of AI won't be one-size-fits-all. Every business will focus on efficiency, selecting the best and least expensive tool that gets the job done properly. That means choosing the right-sized model for each project, whether a general-purpose LLM or a smaller, domain-specific SLM, to deliver better results with fewer resources and less need to migrate data to the cloud.

In today's environment, where public confidence in AI-generated answers is critical, trusted AI and data are mandatory for the next wave of business solutions. We believe smaller, domain-specific language models provide a smart and reliable way to address business challenges. You don't always need a cannon to kill a mosquito: some problems can be solved efficiently with simpler tools, such as open-source models like Mistral, Meta's Llama, or SmolLM. These models are particularly effective for handling many smaller tasks, where larger models like ChatGPT or Claude would add unnecessary complexity and expense.

However, for challenges that require broader knowledge and deeper context, large-scale LLMs excel. The key lies in orchestration: choosing the right tool for each problem, minimizing costs, and maximizing efficiency, as any successful business would. Leaders who invest in these solutions will be well positioned to optimize their use of AI, remain competitive, and accelerate growth in their market sectors.
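
Orchestration can be sketched as a simple router that sends each task to the cheapest model trusted with that task type. Everything here is illustrative: the model identifiers, the task-type labels, and which model may handle which task are hypothetical, and the prices are the blended averages quoted earlier. A production router would also weigh latency, accuracy on your own evaluations, and data-residency rules.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelOption:
    name: str                  # hypothetical model identifier
    price_per_m_tokens: float  # USD per 1M tokens, 3:1 input:output blend
    task_types: frozenset      # task types this model is trusted to handle

# Hypothetical catalog: the small model covers routine tasks; the large model
# also covers tasks that need broader knowledge and deeper context.
CATALOG = (
    ModelOption("llama-3.1-8b", 0.10,
                frozenset({"summarize", "extract", "classify", "cluster"})),
    ModelOption("gpt-4o", 2.90,
                frozenset({"summarize", "extract", "classify", "cluster",
                           "multi_step_reasoning", "open_domain_qa"})),
)

def route(task_type: str, catalog=CATALOG) -> ModelOption:
    """Return the cheapest model registered for the given task type."""
    candidates = [m for m in catalog if task_type in m.task_types]
    if not candidates:
        # Fail loudly instead of silently defaulting to the priciest model.
        raise ValueError(f"no model registered for task type {task_type!r}")
    return min(candidates, key=lambda m: m.price_per_m_tokens)

for task in ("summarize", "multi_step_reasoning"):
    print(f"{task:>20} -> {route(task).name}")
```

With this catalog, routine summarization goes to the 8B model, while multi-step reasoning falls through to GPT-4o. An unregistered task type raises an error, which keeps routing decisions explicit.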

How EduLabs Can Help

At EduLabs, we are here to help businesses navigate the evolving AI landscape. Whether you’re looking to implement small language models, large-scale solutions, or a mix of both, we offer expert guidance and services tailored to your needs. Our offerings include:

- AI & ML Consulting: Get expert advice on how to integrate AI and machine learning into your business strategies effectively.

- AI Development Services: We specialize in building customized AI solutions that address your unique business challenges.

- AI & ML Training Programs: Empower your team with hands-on training in AI and machine learning to build internal expertise.

If you’re ready to take your business to the next level with AI, contact us at EduLabs. Together, we can create innovative solutions to help your business thrive. Visit us at EduLabs for more information.